NIST Just Opened a Public RFI on Securing AI Agents

AI agents are moving from “helpful copilots” to systems that can plan, decide, and take action in production. The security industry has been warning for months that this transition changes the threat model: prompt injection, data poisoning, backdoors, and misaligned behavior don’t just distort answers — they can cause real, persistent changes in external systems.

Now the U.S. government is putting formal structure around that reality.

On January 12, 2026, NIST’s Center for AI Standards and Innovation (CAISI) announced a Request for Information (RFI) seeking concrete guidance from industry and researchers on how to secure the development and deployment of AI agent systems. (NIST) The notice itself appeared in the Federal Register on January 8, 2026, and the public comment period closes March 9, 2026 (docket NIST‑2025‑0035). (Federal Register)

This is not a casual “thought leadership” exercise. It’s a standard-setting move — and it’s happening right as enterprises are experimenting with agentic automation in systems that were never designed for an untrusted reasoning loop.

Among those systems, none is more sensitive than Identity and Access Management (IAM).

What NIST is really doing here

CAISI’s RFI is scoped specifically to AI agent systems capable of taking actions that affect external state — meaning persistent changes outside the agent system itself. (Federal Register) That scope matters, because the security bar changes the moment an agent can:

- modify permissions,

- create credentials,

- rotate keys,

- approve workflows,

- change policies,

- or touch production infrastructure.

NIST is explicitly asking for input on:

- unique threats to agent systems (including indirect prompt injection, data poisoning, backdoors, and failure modes like specification gaming/misaligned objectives), (NIST)

- security practices and controls across model-level, agent system-level, and human oversight categories (including approvals for consequential actions and controls for handling sensitive and untrusted data), (Federal Register)

- how to measure and assess security (methods, evaluation approaches, documentation expectations), (Federal Register)

- how to constrain and monitor deployment environments (what the agent can access, how to monitor, how to implement rollbacks/undoes). (NIST)

NIST also makes it clear that the output of this process is intended to inform “voluntary guidelines and best practices” for agent security — the kind of guidance that quickly becomes a procurement checkbox, an audit expectation, and eventually (in some sectors) a regulatory requirement. (NIST)

Why this is happening now: “agent hijacking” is real, measurable, and still easy

This RFI didn’t appear in a vacuum. CAISI has already been publishing technical work on agent security — including a 2025 technical blog on agent hijacking, describing it as a form of indirect prompt injection where malicious instructions are inserted into data an agent ingests (emails, files, web pages, Slack messages), causing unintended harmful actions. (NIST)

That blog emphasizes a core security reality:

The architecture of many LLM agents requires combining trusted instructions with untrusted data in the same context — and attackers exploit that lack of separation. (NIST)

CAISI’s point is simple: if we can’t reliably prevent injection at the model layer, we must build system-level constraints and evaluation discipline that reduce blast radius and make incidents detectable and recoverable. (NIST)

IAM is the ultimate “external state” environment

If you want a single place where agent security has to be real — not demo-safe — it’s IAM.

IAM is the security layer that gates:

- every SaaS app,

- every cloud console,

- every privileged API,

- every admin action,

- and increasingly, every machine identity and automation identity.

So when an agent operates in IAM, it is operating in the control plane for the business.

That’s why the NIST framing (“agents that affect external state”) maps directly onto identity workflows:

- granting access,

- expiring time-bound entitlements,

- modifying group membership,

- enforcing policy,

- provisioning and deprovisioning accounts,

- rotating secrets,

- and creating/removing privileged identities.

In other words: IAM is where an agent goes from assistant to operator.

The emerging compliance posture: agents will be judged like safety-critical systems

NIST’s RFI is an early sign of how organizations will soon be expected to justify agent safety. Even before formal regulation lands, enterprise buyers will ask for proof that an agent system:

- Separates untrusted inputs from privileged actions. If untrusted text can directly steer an execution tool, you have an exploit chain. CAISI explicitly focuses on risks that arise when “model outputs” are combined with software actions, including indirect prompt injection. (NIST)

- Implements least privilege and constrained environments. The RFI calls out traditional best practices like least privilege and zero trust as relevant starting points, and asks how to constrain and monitor an agent’s access in its deployment environment. (Federal Register)

- Uses human oversight for consequential actions. CAISI explicitly asks for “human oversight controls,” including approvals for consequential actions and network access permissions. (Federal Register)

- Can be evaluated and measured. NIST is not just looking for opinions; it is asking for methods and metrics to assess agent security during development and after deployment. (Federal Register)

- Has monitoring, detection, and rollback paths. The RFI asks about monitoring deployment environments and implementing undoes/rollbacks/negations for unwanted action trajectories. (Federal Register)

This is the direction standards are heading: not “did you write a good system prompt,” but how is the system engineered to be safe even when the model fails?
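To make the rollback expectation concrete, here is a minimal sketch of an action journal: every state change is recorded together with a function that reverses it, so an unwanted action trajectory can be undone in reverse order. The `ActionJournal` class and its method names are hypothetical, invented for illustration; they are not taken from the RFI or from any product.

```python
from typing import Callable, List, Tuple


class ActionJournal:
    """Hypothetical journal: records each change with a function that reverses it."""

    def __init__(self) -> None:
        self._entries: List[Tuple[str, Callable[[], None]]] = []

    def apply(
        self,
        description: str,
        do: Callable[[], None],
        undo: Callable[[], None],
    ) -> None:
        """Run the change, then remember how to reverse it."""
        do()
        self._entries.append((description, undo))

    def rollback(self) -> List[str]:
        """Undo recorded changes, newest first; return what was undone."""
        undone: List[str] = []
        while self._entries:
            description, undo = self._entries.pop()
            undo()
            undone.append(description)
        return undone
```

A real deployment would also need to handle actions that cannot be cleanly reversed (a credential that has already been used, for example), which is part of why the RFI asks about monitoring as well as rollback.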

The design constraint that matters in IAM: no “Ask→Read→Write” exploit chains

Security practitioners have converged on a pragmatic architecture lesson for agentic systems: you must prevent a single execution loop from simultaneously being exposed to:

- untrusted inputs,

- sensitive access,

- and the ability to change state.

This is the core of Meta’s widely discussed “Rule of Two” pattern (popularized through industry writeups and analysis): if an agent can read untrusted inputs, access sensitive data, and act on external state, all within the same session, prompt injection becomes a direct path to impact. Holding any one session to at most two of those three properties breaks the chain.
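The three-property check described above can be expressed in a few lines. This is a sketch under stated assumptions: `SessionCapabilities` and both function names are hypothetical, and a real system would derive these flags from its tool and data configuration rather than set them by hand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SessionCapabilities:
    """Hypothetical summary of what one agent execution loop is exposed to."""
    reads_untrusted_input: bool    # e.g. emails, web pages, tickets
    accesses_sensitive_data: bool  # e.g. credentials, entitlement records
    can_change_state: bool         # e.g. grant access, rotate keys


def violates_rule_of_two(caps: SessionCapabilities) -> bool:
    """Return True when all three risky properties hold in the same loop."""
    return (
        caps.reads_untrusted_input
        and caps.accesses_sensitive_data
        and caps.can_change_state
    )


def requires_human_approval(caps: SessionCapabilities) -> bool:
    """In this sketch, a session holding all three properties must be gated."""
    return violates_rule_of_two(caps)
```

For example, a session that reads inbound email and can modify group membership but has no access to sensitive data passes the check, while one that also reads entitlement records would be routed to a human approver.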

Whether or not you use that exact framing, it aligns tightly with what CAISI is asking for in the RFI:

- tool restrictions,

- approvals,

- permissioning boundaries,

- monitoring,

- and environment constraints. (Federal Register)

IAM is where this matters most, because the “tools” are literally privilege.

Why YeshID is positioned for the next phase of agent security

Most “agentic” product announcements start with a chat interface.

That’s backwards for IAM.

In identity, the interface is not the hard part. The hard part is building a system that can safely turn insight into action, with:

- policy grounding,

- approvals,

- auditability,

- and explicit boundaries between reasoning and execution.

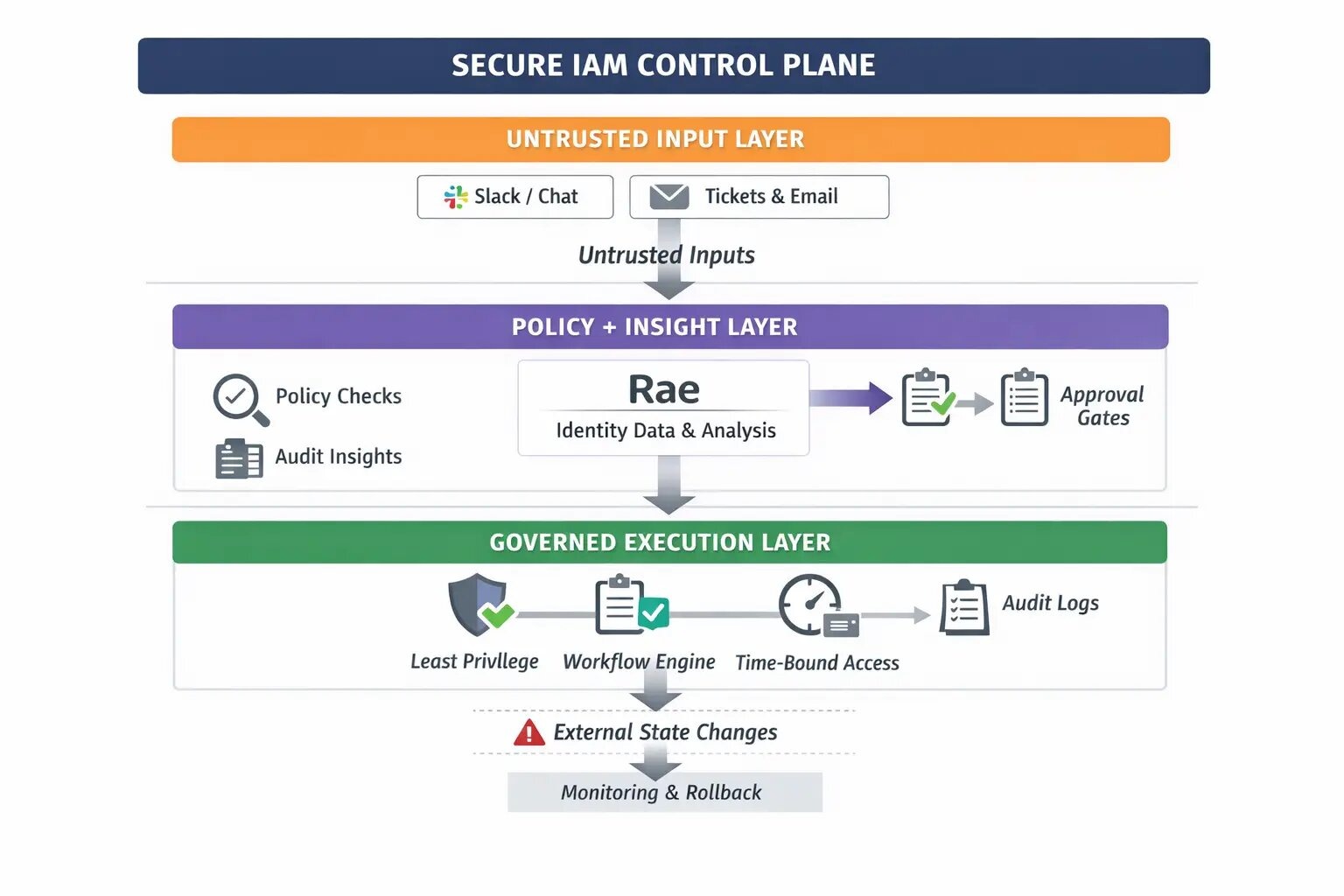

YeshID is built as an AI-native identity control plane — governing authentication, access, and lifecycle across humans, applications, and non-human identities. (YeshID) And Rae (YeshID’s IAM-native agent) is positioned explicitly around the boundary the NIST RFI is pushing the industry toward: separate insight from action, keep actions governed. (YeshID)

1) Clear boundaries between insight and action

YeshID’s Rae messaging is explicit: it “separates understanding from execution,” and any actions follow the same approvals, permissions, and safeguards as other identity operations — “no silent changes” and “no autonomous privilege escalation.” (YeshID)

That’s exactly the kind of control-plane guardrail enterprises will be expected to articulate as agent security standards mature.
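As a generic illustration of separating understanding from execution, the sketch below lets the reasoning side produce only a proposal object, while a separate gate refuses to execute anything that lacks a recorded human approval. This is not YeshID’s API or implementation; `ProposedAction`, `ApprovalGate`, and the action kinds are hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ProposedAction:
    """What the reasoning side may emit; it has no effect by itself."""
    action_id: str
    kind: str    # e.g. "add_to_group"
    target: str  # e.g. "alice -> prod-admins"
    approved_by: List[str] = field(default_factory=list)


class ApprovalGate:
    """Hypothetical gate: executes a proposal only after a human signs off."""

    def __init__(self, executors: Dict[str, Callable[[str], str]]) -> None:
        self._executors = executors
        self.audit_log: List[str] = []

    def approve(self, action: ProposedAction, approver: str) -> None:
        action.approved_by.append(approver)
        self.audit_log.append(f"approved {action.action_id} by {approver}")

    def execute(self, action: ProposedAction) -> str:
        # No approval on record means no change, and the attempt is logged.
        if not action.approved_by:
            self.audit_log.append(f"blocked {action.action_id}: no approval")
            raise PermissionError("action requires human approval")
        if action.kind not in self._executors:
            raise ValueError(f"unknown action kind: {action.kind}")
        result = self._executors[action.kind](action.target)
        self.audit_log.append(f"executed {action.action_id}: {result}")
        return result
```

The design choice worth noting is that the blocked attempt is written to the audit log as well, so a hijacked agent that tries to act without approval leaves a trace instead of failing silently.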

2) Answers grounded in real identity data

Rae is described as answering questions using structured identity data across users, apps, groups, permissions, and history — with responses designed to be traceable and explainable. (YeshID)

This matters for two reasons:

- it reduces hallucinated “best guess” outputs, and

- it supports the audit and assessment posture NIST is asking about (what happened, why it happened, what data was used). (Federal Register)

3) Guarded workflows and approvals

YeshID supports access requests directly in Slack or Teams, with in-line approvals and time-based access that expires automatically — a built-in rollback mechanism for access grants. (YeshID)

In a world where agent actions increasingly need to be constrained, reversible, and governed, “time-bound by default” is not a convenience feature — it’s a safety feature.
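One way to picture “time-bound by default” is a grant store in which every entitlement carries an expiry and access checks compare against the clock. The sketch below is illustrative only and does not describe how YeshID implements expiring access; `Grant`, `TimeBoundGrants`, and the optional `now` parameter (included so the behavior can be tested deterministically) are assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Grant:
    user: str
    entitlement: str
    expires_at: datetime


class TimeBoundGrants:
    """Hypothetical store where no grant can exist without an expiry."""

    def __init__(self) -> None:
        self._grants: Dict[Tuple[str, str], Grant] = {}

    def grant(self, user: str, entitlement: str, ttl: timedelta,
              now: Optional[datetime] = None) -> Grant:
        now = now or datetime.now(timezone.utc)
        if ttl <= timedelta(0):
            raise ValueError("ttl must be positive")
        new_grant = Grant(user, entitlement, now + ttl)
        self._grants[(user, entitlement)] = new_grant
        return new_grant

    def is_active(self, user: str, entitlement: str,
                  now: Optional[datetime] = None) -> bool:
        # Access is denied once the expiry passes, even before any cleanup runs.
        now = now or datetime.now(timezone.utc)
        found = self._grants.get((user, entitlement))
        return found is not None and now < found.expires_at

    def sweep(self, now: Optional[datetime] = None) -> int:
        """Remove expired grants; return how many were removed."""
        now = now or datetime.now(timezone.utc)
        expired = [k for k, g in self._grants.items() if now >= g.expires_at]
        for key in expired:
            del self._grants[key]
        return len(expired)
```

Because `is_active` checks the expiry directly, a delayed or failed cleanup job does not extend anyone’s access; the sweep only tidies up records that are already inert.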

4) Environment-integrated execution instead of freeform tool calling

NIST is explicitly asking how agents should be constrained in deployment environments and how monitoring/rollback should work. (Federal Register) YeshID’s approach is to put agentic capability inside an IAM control plane, not outside it.

That difference is foundational:

- a generic agent “calls tools,”

- an IAM-native agent operates inside a system designed to enforce policy, approvals, and traceability.

The takeaway: regulated expectations are coming — and IAM will be the test case

NIST’s RFI is the clearest public signal yet that “secure agentic systems” are becoming a defined engineering discipline, with standards, evaluation methods, and best practices on the way. (NIST)

IAM will be the highest-pressure proving ground because identity is the security layer for everything else — and because agents that can change access are, by definition, agents that can change external state. (Federal Register)

YeshID’s bet is aligned to that trajectory: agentic IAM is inevitable, but only systems designed for guardrails, policy grounding, approvals, and auditability will be deployable in the real enterprise.

Rae is built for that reality — not as a chatbot, but as governed identity automation inside an authoritative control plane. (YeshID)